What is ADC

An analog-to-digital converter (ADC, A/D, or A-to-D) is a system that converts an analog signal, such as sound entering a microphone or light entering a digital camera, into a digital signal.

An ADC also makes it possible to measure an analog voltage or current input to an electronic device in isolation. This device can be a voltage or current indicator. Normally, the ADC output is a binary number corresponding to the input. ADCs also offer other capabilities, such as differential measurement, input signal amplification and…
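
To make the "binary number corresponding to the input" concrete, here is a minimal sketch of an ideal converter. It assumes a unipolar input range of 0 to `v_ref` and a simple truncating quantizer; the function names, the 3.3 V reference, and the 10-bit resolution are illustrative assumptions, not taken from any particular device.

```python
def adc_code(v_in: float, v_ref: float = 3.3, bits: int = 10) -> int:
    """Ideal unipolar ADC (assumed model): map 0..v_ref to a code 0..2**bits - 1."""
    levels = 1 << bits                     # number of output codes, e.g. 1024 for 10 bits
    code = int(v_in / v_ref * levels)      # truncate to the code at or below the input
    return max(0, min(levels - 1, code))   # clamp inputs outside the range


def code_to_voltage(code: int, v_ref: float = 3.3, bits: int = 10) -> float:
    """What a voltage indicator would display for a given code (lower edge of its step)."""
    return code * v_ref / (1 << bits)


code = adc_code(1.65)
print(code, format(code, "010b"), round(code_to_voltage(code), 4))
```

One step of the output code corresponds to `v_ref / 2**bits` volts in this model, so a higher resolution gives a finer voltage reading.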

Today, ADCs are produced as integrated circuits (ICs) together with analog circuits (amplifiers, comparators, limiters, etc.) and digital circuits (shift registers, communication circuits, etc.).

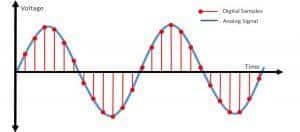

An ADC converts a continuous-time, continuous-amplitude analog signal into a discrete-time, discrete-amplitude digital signal. An ADC cannot convert continuously, so it samples the input signal, converts that sample to a digital value, and then moves on to the next sample.
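
The sample-then-convert cycle described above can be sketched in a few lines. This is only an illustration under stated assumptions: the input is modeled as a Python function of time, the converter is ideal, and the names `sample_and_quantize` and `tone`, the 1 kHz sampling rate, and the 8-bit resolution are all hypothetical.

```python
import math


def sample_and_quantize(signal, fs, n_samples, v_ref=1.0, bits=8):
    """Take n_samples of signal(t) at rate fs and convert each one to an integer code."""
    levels = 1 << bits
    codes = []
    for n in range(n_samples):
        t = n / fs                          # discrete time: one sample every 1/fs seconds
        v = signal(t)                       # value of the input at the sampling instant
        code = int(v / v_ref * levels)      # discrete amplitude: pick an output code
        codes.append(max(0, min(levels - 1, code)))
    return codes


def tone(t):
    """Assumed test input: a 50 Hz sine centered at 0.5 V with 0.4 V amplitude."""
    return 0.5 + 0.4 * math.sin(2 * math.pi * 50.0 * t)


codes = sample_and_quantize(tone, fs=1000.0, n_samples=20)
print(codes)
```

Each entry in `codes` stands for the input at one instant only; whatever the signal does between two sampling instants is not seen by the converter.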

ADC performance is primarily determined by bandwidth (sampling rate) and signal-to-noise ratio (SNR). The SNR of an ADC is affected by many factors, including resolution, linearity, and accuracy (how closely the digital value matches the actual analog signal). The SNR is often summarized in terms of the effective number of bits (ENOB): the number of bits in each conversion that are, on average, not noise. An ideal ADC has an ENOB equal to its resolution. ADCs are selected according to the conversion speed and the SNR, or in other words the accuracy, required in the conversion.
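
As a rough illustration of how SNR and ENOB relate, the sketch below uses the widely quoted full-scale sine-wave approximation SNR ≈ 6.02·N + 1.76 dB for an ideal N-bit converter, and inverts it to get ENOB. In practice the measured figure fed into this formula is usually SINAD (signal to noise and distortion). The function names and the 68 dB example value are assumptions for illustration.

```python
def ideal_snr_db(bits: int) -> float:
    """Quantization-limited SNR of an ideal N-bit ADC for a full-scale sine input."""
    return 6.02 * bits + 1.76


def enob(sinad_db: float) -> float:
    """Effective number of bits implied by a measured SINAD (or SNR) in dB."""
    return (sinad_db - 1.76) / 6.02


# An ideal 12-bit converter has an ENOB equal to its resolution.
print(ideal_snr_db(12), enob(ideal_snr_db(12)))

# Hypothetical 12-bit part that measures 68 dB: fewer than 12 bits are effectively usable.
print(round(enob(68.0), 2))
```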

A higher conversion rate means more samples are taken over a given time. The higher the sampling rate, the more information is captured about the signal, and the higher the accuracy, the more faithfully each sample represents the input.
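
A quick way to see the effect of the sampling rate is to count how many samples land in one period of the input. The sketch below does that and also checks the well-known Nyquist criterion (the sampling rate must exceed twice the highest signal frequency). The function names and the example frequencies are illustrative assumptions.

```python
def samples_per_period(f_signal_hz: float, fs_hz: float) -> float:
    """How many samples the ADC takes during one period of the input signal."""
    return fs_hz / f_signal_hz


def satisfies_nyquist(f_signal_hz: float, fs_hz: float) -> bool:
    """True if the sampling rate is more than twice the signal frequency."""
    return fs_hz > 2 * f_signal_hz


for fs in (1_500.0, 8_000.0, 48_000.0):
    # Assumed input: a 1 kHz tone sampled at three different rates.
    print(fs, samples_per_period(1_000.0, fs), satisfies_nyquist(1_000.0, fs))
```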